Your traffic’s decent, but conversions? Flat.

Your blog ranks. Your landing pages get visits. But trial signups? Demo requests? Crickets.

If that sounds familiar, the problem isn’t traffic. It’s that your pages aren’t convincing visitors to take the next step.

That’s where SEO A/B testing comes in: a powerful, underused, data-driven technique for turning passive traffic into actual growth.

By testing headlines, CTAs, or even content structure, SaaS marketers can find out what actually improves trial activations, demo bookings, and user intent. In fact, four out of five SEO professionals have seen an increase in organic traffic after running an A/B test.

Still, 68% of companies skip it, often because they think it’s too complex, too slow, or only meant for boosting traffic.

This post explains what SEO split testing actually is and how it’s different from traditional A/B testing for UX. By the end, SaaS teams will understand how to implement it without slowing down engineering or growth.

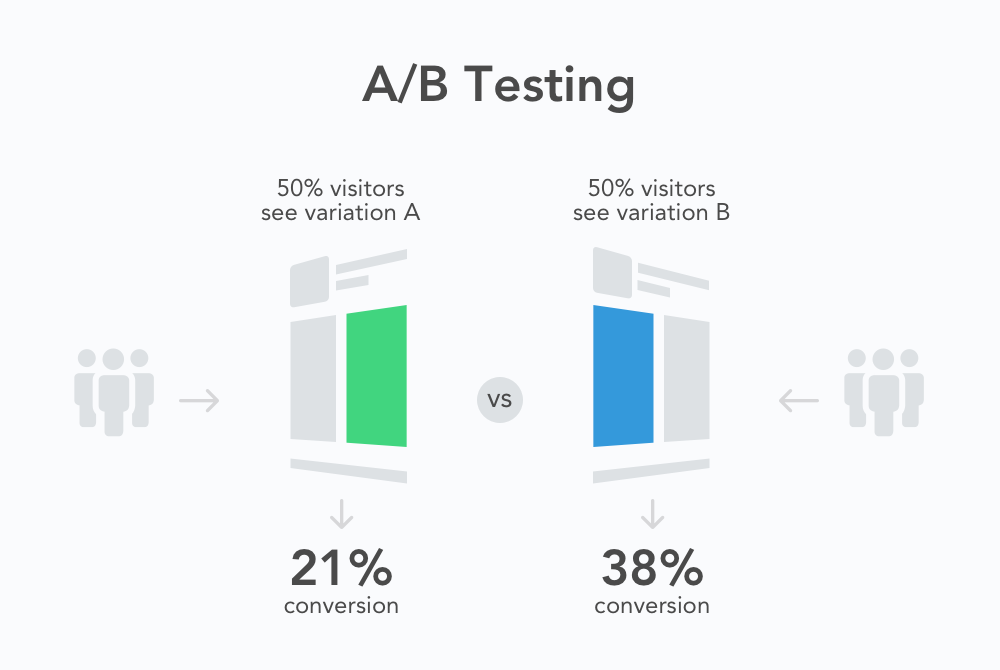

SEO A/B testing, or split testing, is about making controlled changes—like tweaking H1s, rewriting meta titles, adding structured data, or adjusting internal links—to some of your webpages and then comparing their performance against unchanged pages.

Instead of guessing what might improve your rankings, A/B testing shows how those changes impact your search rankings and organic traffic.

Here’s how it works: Select a group of similar, high-traffic pages—say, 50 blog posts or 20 feature landing pages. Then, split them into two statistically similar groups. In one group (the variant), you apply a specific SEO change. The other group (the control) stays exactly the same.
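As a rough sketch of the splitting step, the Python below assumes you have exported each page’s pre-test organic clicks (for example, from Google Search Console) as `(url, clicks)` pairs. It uses matched-pair randomization, one common way to get statistically similar groups: pages are ranked by traffic, paired with their nearest neighbor, and one page of each pair is randomly assigned to each group. The function name and input format are illustrative, not part of any tool’s API.

```python
import random


def split_pages(pages, seed=42):
    """Split (url, clicks) pairs into control and variant groups with similar traffic."""
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    # Rank by pre-test clicks so neighboring pages have similar baselines.
    ranked = sorted(pages, key=lambda page: page[1], reverse=True)
    control, variant = [], []
    # Walk the ranked list two pages at a time and randomly send
    # one page of each pair to each group.
    for i in range(0, len(ranked) - 1, 2):
        pair = [ranked[i], ranked[i + 1]]
        rng.shuffle(pair)
        control.append(pair[0])
        variant.append(pair[1])
    if len(ranked) % 2:
        # With an odd page count, the leftover (lowest-traffic) page goes to control.
        control.append(ranked[-1])
    return control, variant


pages = [(f"/blog/post-{i}", i * 10) for i in range(1, 51)]  # hypothetical data
control, variant = split_pages(pages)
```

Because pages are paired on traffic, the two groups’ totals can differ by at most the sum of the gaps within each pair, which keeps the buckets close without hand-picking pages.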

Over the next few weeks, track impressions, average ranking position, and click-through rate (CTR) for both groups using tools like Google Search Console, SearchPilot, or SplitSignal. Then compare the results to see whether the change improved search performance.
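To compare the groups, per-page rows have to be rolled up into group-level numbers. This sketch assumes hypothetical `PageStats` rows (clicks, impressions, and average position per page for the test period, as you might export them) and computes group CTR plus an impression-weighted average position. Weighting by impressions is one reasonable way to aggregate position across pages, not the only one.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    clicks: int
    impressions: int
    position: float  # the page's average position over the test period


def group_summary(rows):
    """Roll per-page stats up into group-level clicks, impressions, CTR, and position."""
    clicks = sum(r.clicks for r in rows)
    impressions = sum(r.impressions for r in rows)
    if impressions == 0:
        return {"clicks": clicks, "impressions": 0, "ctr": 0.0, "avg_position": None}
    return {
        "clicks": clicks,
        "impressions": impressions,
        "ctr": clicks / impressions,
        # Weight each page's position by its impressions so pages that
        # were shown more often count for more.
        "avg_position": sum(r.position * r.impressions for r in rows) / impressions,
    }
```

Run it once on the control rows and once on the variant rows, then compare the two summaries side by side.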

Most importantly, SEO A/B testing is not the same as A/B testing for users.

SEO A/B testing targets changes that impact how Google crawls and ranks your pages, like meta tags, structured data, or internal links. On the other hand, user A/B testing focuses on front-end elements like button text or layout that affect conversions after the click.

A well-structured SEO test can give you clear answers and minimize the risk of misleading results or unintended side effects. Here’s how to get it right:

Start every SEO test by picking pages that are similar in template and purpose, so you're testing only one thing at a time.

If you mix different types of pages, like product pages and blog posts, variations in audience or structure could throw off your results. On the other hand, sticking to one page type keeps the test consistent and the data reliable.

For example, you could test all “destination city” pages on a travel site or a set of product category pages in an e-commerce store.

Other considerations for selecting pages:

Every effective SEO test starts with a strong hypothesis. Without it, you’re not experimenting, you’re just making random changes.

A solid hypothesis clearly states what you’re changing and what outcome you expect—based on data, user behavior, or SEO logic. It keeps your test focused, sets clear success criteria, and ensures you’re proving a specific theory instead of guessing what might work.

Try using this format: “We believe that doing [change] on [page type] will result in [expected outcome] because [rationale].”
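If you log tests in code or a shared tracker, it helps to store the hypothesis together with a success criterion fixed before launch. A minimal sketch; the `Hypothesis` class, its fields, the example values, and the 5% threshold are all hypothetical:

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    change: str
    page_type: str
    expected_outcome: str
    rationale: str
    min_lift: float  # smallest relative lift that counts as success, set before launch

    def statement(self) -> str:
        # Fill in the "We believe that doing [change] on [page type] ..." template.
        return (
            f"We believe that doing {self.change} on {self.page_type} "
            f"will result in {self.expected_outcome} because {self.rationale}."
        )

    def is_supported(self, observed_lift: float) -> bool:
        # Judge the result against the pre-agreed threshold, not one chosen afterward.
        return observed_lift >= self.min_lift


title_test = Hypothesis(
    change="a title-tag rewrite that leads with the primary keyword",
    page_type="feature landing pages",
    expected_outcome="a higher organic CTR",
    rationale="the title will more closely match what searchers type",
    min_lift=0.05,
)
print(title_test.statement())
```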

For example:

Tips to create a hypothesis statement properly:

Even a perfectly planned test can produce unreliable data if your control and variant groups aren’t properly balanced. Make sure both groups have similar traffic, content, and user intent, so that any difference in performance comes from the change rather than unrelated factors.

Here’s how to bucket effectively:

Tip: If your control group already outperforms the variant before the test starts, that’s a red flag. Re-bucket and re-balance before rolling anything out.
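A quick way to run that check before launch is to compare the groups’ average pre-test clicks per page. In this sketch the 5% tolerance is a hypothetical threshold, and it flags an imbalance in either direction, since a variant that starts ahead muddies the results just as much as a control that does.

```python
from statistics import mean


def baseline_gap(control_clicks, variant_clicks):
    """Relative gap between the groups' average pre-test clicks per page.

    Positive means the control is ahead; negative means the variant is ahead.
    """
    control_avg, variant_avg = mean(control_clicks), mean(variant_clicks)
    if variant_avg == 0:
        raise ValueError("variant group has no pre-test traffic to compare against")
    return (control_avg - variant_avg) / variant_avg


def needs_rebucket(control_clicks, variant_clicks, tolerance=0.05):
    # Flag an imbalance larger than the tolerance in either direction.
    return abs(baseline_gap(control_clicks, variant_clicks)) > tolerance
```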

This step is straightforward: apply your SEO change only to the pages in the variant bucket, and leave the control pages unaltered. How you implement it depends on your site’s architecture, but common methods include:

After implementation, you’ll have two template versions—one for control pages and one for variant pages. But each URL will only display one version at a time, so Google won’t see conflicting content on the same page.
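Whatever the method, the key property is that the version a URL serves depends only on its bucket, never on who is requesting it. A minimal server-side sketch, assuming the variant bucket is stored as a set of URL paths and that the change under test is a hypothetical title-tag suffix:

```python
import html

# Hypothetical variant bucket from the bucketing step; in practice this
# would be loaded from a file or database.
VARIANT_PATHS = {"/features/reporting", "/features/integrations"}


def render_title(path: str, page_title: str) -> str:
    """Return the <title> tag for a page based only on its bucket."""
    safe_title = html.escape(page_title)
    if path in VARIANT_PATHS:
        # Variant template: hypothetical change that appends a CTA phrase.
        return f"<title>{safe_title} | Free 14-Day Trial</title>"
    # Control template: unchanged.
    return f"<title>{safe_title}</title>"
```

Because the function never looks at request headers such as the User-Agent, Googlebot and human visitors always get the same version of each URL.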

This is where you find out if your hypothesis was correct. Your job here is to collect data over the test period and analyze the difference between your control and variant groups. Here’s how to approach it:

For example, if the variant group was expected to get 9,500 visits but ends up with 11,000, while the control group hits its forecasted 10,000—that’s a strong indicator your change worked.
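The arithmetic behind that example: compare each group’s actual traffic with its forecast, then subtract the control’s deviation so that shifts affecting both groups cancel out. This difference of lifts is one simple way to summarize the result; dedicated testing tools may use more elaborate models.

```python
def lift_vs_forecast(actual: float, forecast: float) -> float:
    """Relative difference between actual and forecast traffic."""
    return (actual - forecast) / forecast


variant_lift = lift_vs_forecast(11_000, 9_500)   # numbers from the example above
control_lift = lift_vs_forecast(10_000, 10_000)
# Net effect attributed to the change: variant lift minus control lift.
net_effect = variant_lift - control_lift
print(f"variant {variant_lift:.1%}, control {control_lift:.1%}, net {net_effect:.1%}")
# prints: variant 15.8%, control 0.0%, net 15.8%
```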

The truth is, not all SEO testing methodologies give you reliable results.

Use the wrong approach, and it might lead to false positives—or worse, wasted time on changes that don’t work. Understanding the strengths and limits of each methodology helps you make confident, data-backed decisions that actually improve rankings and traffic.

Strategy Tip: If you're not sure where to start, use an observational study to identify patterns, then validate them with a quasi-experiment. Save RCTs for big-ticket changes you plan to scale.

A randomized controlled trial (RCT) is the most robust method for SEO A/B testing. You take a set of similar pages, randomly split them into two groups (control vs variant), and apply your changes to just one group.

Because the split is random, any differences in performance are more likely to be caused by the change itself, not external factors.

Example: You have 500 category pages. You randomly split them into two groups: 250 variant, 250 control. Both groups have similar organic traffic before the test (a quick check that the randomization produced comparable groups).

You add an FAQ section to the 250 variant pages. The control group stays the same. Over the next few weeks, you track traffic and rankings. If the variant group consistently outperforms the control, the change likely worked.
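“Consistently outperforms” can be checked with a significance test. The method above doesn’t prescribe one; a permutation test is a common, assumption-light option swapped in here. This sketch assumes you have each page’s percentage change in clicks (test period vs. pre-test) for both groups. It reshuffles the group labels many times and measures how often a random relabeling produces a gap at least as large as the real one.

```python
import random


def _avg(values):
    return sum(values) / len(values)


def permutation_test(control, variant, n_iter=10_000, seed=0):
    """One-sided permutation test on per-page changes.

    Returns (observed difference in means, approximate p-value). A small
    p-value means a gap this large rarely appears by chance alone.
    """
    rng = random.Random(seed)
    observed = _avg(variant) - _avg(control)
    pooled = list(control) + list(variant)
    n_variant = len(variant)
    hits = 0
    for _ in range(n_iter):
        # If the change had no effect, the labels are interchangeable,
        # so shuffle them and recompute the gap.
        rng.shuffle(pooled)
        if _avg(pooled[:n_variant]) - _avg(pooled[n_variant:]) >= observed:
            hits += 1
    # Add-one correction keeps the estimate from ever being exactly zero.
    return observed, (hits + 1) / (n_iter + 1)
```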

Not every SEO test can be a perfectly randomized controlled experiment. Sometimes, limitations in traffic, page count, or setup lead marketers to rely on alternative methods. They’re easier to run, but often less reliable. Here’s how they work:

Since these groups weren’t randomly chosen and may behave differently by default, it’s hard to know whether your change made the impact or whether it was just page type, seasonality, or other hidden factors.

Observational tests don’t involve any changes. You simply analyze existing page performance, such as traffic, rankings, and engagement, to identify patterns or relationships. It’s a great way to uncover early insights or validate assumptions before running a real test.

Two common observational studies include:

For example, you might check the top 10 Google results for 100 keywords and note things like word count, keyword in the title, or domain authority. If 80% of the top pages have the keyword in their URL, you might think that’s important. But remember, it doesn’t prove that having the keyword in the URL causes higher rankings.
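A sketch of how that observational tally might be computed, assuming you have already collected the top-ranking URLs for each keyword as `(keyword, url)` pairs. It only measures how often the pattern appears, which says nothing about causation. The matching rule (spaces in the keyword become hyphens in the URL slug) is a simplifying assumption.

```python
from urllib.parse import urlparse


def keyword_in_url_share(results):
    """Share of ranking URLs whose path contains the keyword as a hyphenated slug."""
    if not results:
        return 0.0
    hits = 0
    for keyword, url in results:
        slug = "-".join(keyword.lower().split())  # "crm software" -> "crm-software"
        if slug in urlparse(url).path.lower():
            hits += 1
    return hits / len(results)
```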

SEO A/B testing is totally legit, as long as it’s done with care. Here are the key dos and don’ts for SEO A/B testing:

Cloaking means showing one thing to Googlebot and a different thing to human users (or even showing different content to different users based on user-agent). This is a big no-no in Google’s eyes. Always ensure the content you test is visible to both users and search engines. That includes structured data, text, and on-page elements.

If your test runs on separate URLs (e.g., a staging subdomain), add a canonical tag on the variant pointing to the original page. This tells Google which version to index and avoids duplicate content issues.
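If your CMS or template engine doesn’t add the tag for you, a post-processing step can. A minimal sketch that inserts `<link rel="canonical">` into a variant page’s `<head>`; it assumes a plain lowercase `</head>` tag and is not a substitute for proper head handling in your templates.

```python
import html


def add_canonical(page_html: str, original_url: str) -> str:
    """Insert a canonical link pointing at the original URL into a variant page's <head>."""
    tag = f'<link rel="canonical" href="{html.escape(original_url, quote=True)}">'
    if "</head>" not in page_html:
        raise ValueError("page has no </head> to insert the canonical tag into")
    # Insert just before the first closing </head> tag.
    return page_html.replace("</head>", tag + "</head>", 1)
```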

A 302 redirect tells Google the move is temporary, so it keeps indexing the original URL and preserves its rankings. A 301 (permanent) redirect can confuse things by signaling that the original page has moved for good.
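For illustration, here is a minimal WSGI app that uses only Python’s standard WSGI interface. The `TEST_REDIRECTS` mapping and its URLs are hypothetical, and deciding which visitors get redirected is left to your test setup; the point is the status code, `302 Found` rather than `301 Moved Permanently`.

```python
# Hypothetical mapping from original URLs to their test-variant URLs.
TEST_REDIRECTS = {"/pricing": "/pricing-v2"}


def app(environ, start_response):
    """Minimal WSGI app that temporarily redirects URLs under test."""
    path = environ.get("PATH_INFO", "/")
    target = TEST_REDIRECTS.get(path)
    if target:
        # 302 = temporary, so the original URL remains the one Google indexes.
        start_response("302 Found", [("Location", target)])
        return [b""]
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [f"Original page: {path}".encode("utf-8")]
```

To try it locally, `from wsgiref.simple_server import make_server` and run `make_server("", 8000, app).serve_forever()`.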

End the test once you have clear results. Don’t let it drag on—prolonged testing, especially with uneven exposure, can appear deceptive. A few weeks to a couple of months is usually enough. Afterward, remove test scripts, flags, and unused URLs to avoid clutter and confusion.

While SEO tests focus on Google, remember that real visitors are still browsing those pages. Don’t implement keyword-stuffed blocks, jarring layouts, or unnatural CTAs that harm user experience on variant pages.

SEO A/B testing is a powerful way to drive data-backed growth. Instead of guessing which changes improve rankings or drive traffic, you can test them and measure their real impact on SEO, from higher click-through rates to better keyword positions.

But like any true experiment, it takes planning, precision, and patience to get right. It’s easy to set up wrong, misread the data, or over-index on vanity metrics.

TripleDart is a specialized SaaS SEO agency that offers a unique blend of technical SEO expertise and data-driven content strategy to drive results. From identifying high-impact SEO test ideas to implementing them safely and analyzing the outcomes, we make SEO experimentation scalable and strategic.

Want to test your way to real growth? Let’s talk.

Google treats test pages like any other, as long as the test is set up correctly. Cloaking happens when Googlebot sees different content than users. Ensure your test content is visible to both Googlebot and users to avoid cloaking.

An SEO traffic forecast helps predict what traffic would have been without the change, giving you a baseline to compare actual results. It allows you to measure the true impact of your test and ensures any traffic differences are due to the change, not external factors.
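One simple way to build that baseline, assuming the control group is a fair proxy for what the variant pages would have done: scale the variant group’s pre-test traffic by the control group’s change over the same window. Commercial testing tools typically use more sophisticated forecasting models; this ratio is only a sketch, and the function name is hypothetical.

```python
def forecast_variant(variant_pre: float, control_pre: float, control_test: float) -> float:
    """Counterfactual traffic for the variant group during the test period.

    Assumes the variant pages would have moved at the same rate as the
    control pages, which absorbs seasonality and algorithm updates that
    hit both groups.
    """
    if control_pre == 0:
        raise ValueError("control group needs pre-test traffic to build a forecast")
    return variant_pre * control_test / control_pre


# Hypothetical numbers: control grew from 10,000 to 11,000 visits,
# so a variant group that had 9,000 visits is forecast at 9,900.
baseline = forecast_variant(9_000, 10_000, 11_000)
```

Actual variant traffic above this baseline is the part you can attribute to the change.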

No, it’s not. You need to account for external factors, like seasonality or algorithm updates, that can affect traffic. A traffic forecast helps isolate the impact of your changes by showing what would have happened without the test.

SEO experiments typically run for 2-4 weeks to gather enough data and reach statistical significance. The duration allows you to capture patterns across different cycles (like days of the week) and ensures reliable results without rushing.

Yes, especially for tracking changes like button clicks or on-page interactions. However, for larger-scale tests, like changes to content or page structure, it’s better to implement the changes directly in your site’s code or CMS to ensure full control over the test setup.
